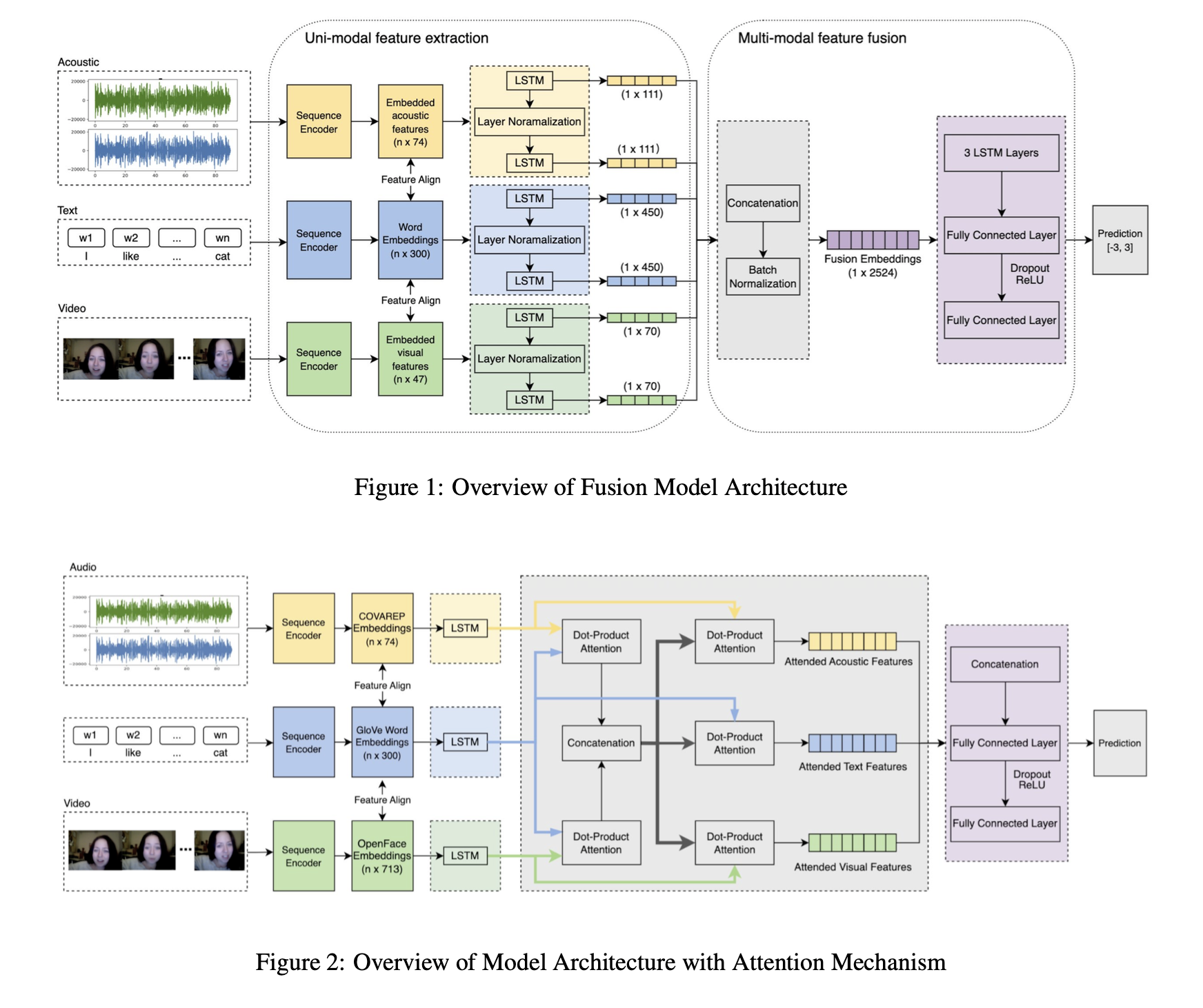

A Multi-modal Approach to Sentiment Analysis and Emotion Recognition

Keywords: Deep Learning; Neural Model Training; LSTM; Attention; Multi-Modal; Sentiment Analysis

Abstract

Multimodal sentiment analysis (MSA) and emotion recognition in conversation (ERC) are natural language processing (NLP) tasks that aim to identify the opinions and emotions people express. In this paper, we explore different approaches to MSA and ERC, implementing unimodal classifiers and then fusing multiple modalities. The models are evaluated on the CMU-MOSI and CMU-MOSEI datasets. Results show that the deep learning models outperform the SVR-based baseline and that multimodal fusion improves performance. Adding attention mechanisms improves performance further. The adapted model achieves competitive results on both sentiment analysis and emotion detection. This study provides insights into model architecture and performance evaluation for MSA and ERC.